Peifeng Wang

I am a Research Scientist at Meta, where I work on reinforcement learning for reasoning. I did my PhD in Computer Science at the University of Southern California (USC), where I was advised by Prof. Muhao Chen and Prof. Xiang Ren.

Email / GitHub / Google Scholar / LinkedIn / CV

Research

I'm broadly interested in LLM post-training and RL for reasoning. My current research focus includes:

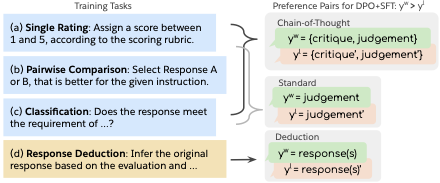

Direct Judgement Preference Optimization
Peifeng Wang, Austin Xu, Yilun Zhou, Caiming Xiong, Shafiq Joty
EMNLP, 2025
arxiv / blog
A multifaceted generative judge model that generalizes across a variety of evaluation tasks.

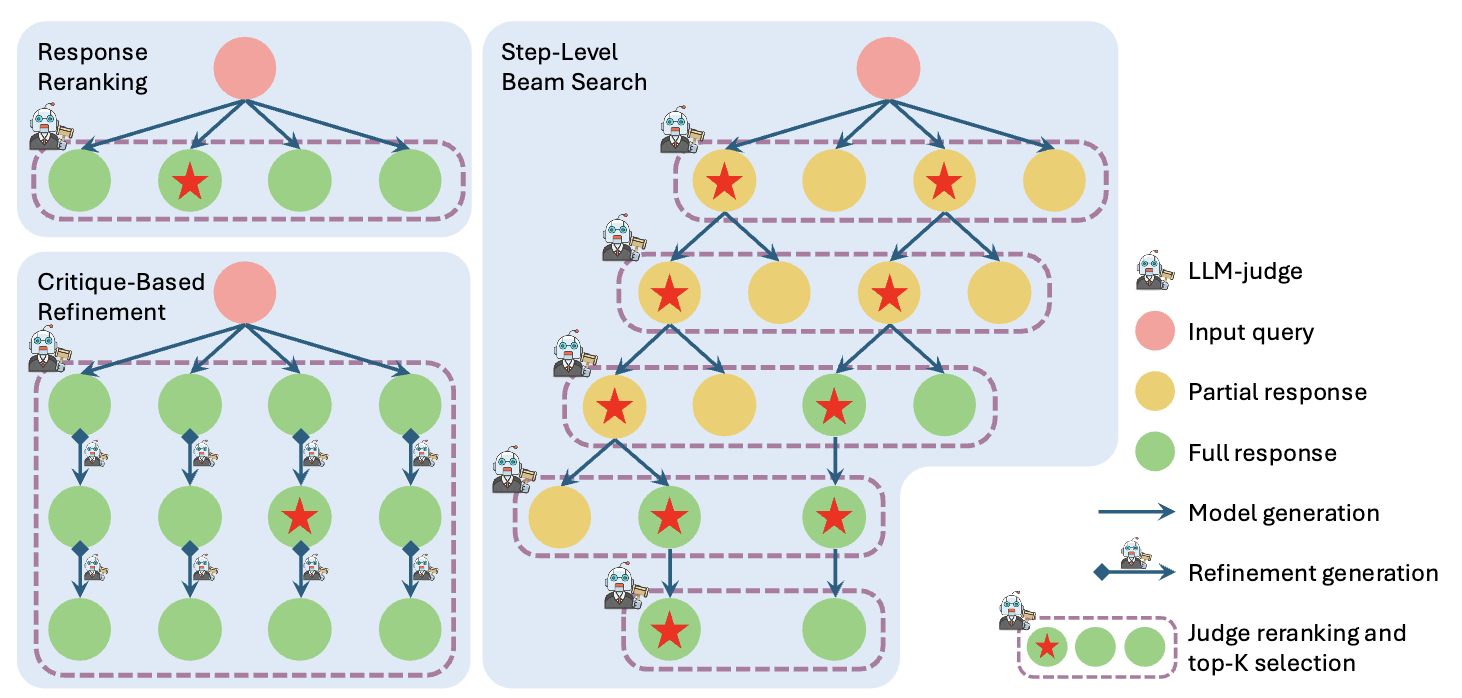

Evaluating judges as evaluators: The benchmark of LLM-judges as test-time scaling evaluators
Yilun Zhou, Austin Xu, Peifeng Wang, Caiming Xiong, Shafiq Joty
ICML, 2025
arxiv / code
A benchmark that evaluates how well LLM-judges perform on reasoning and instruction-following tasks across various test-time scaling settings.

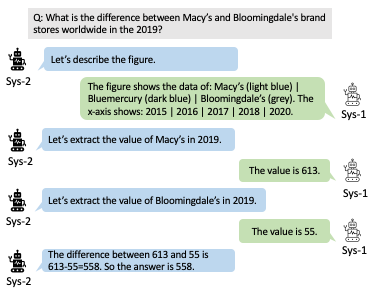

DOMINO: A Dual-System for Multi-step Visual Language Reasoning
Peifeng Wang, Olga Golovneva, Armen Aghajanyan, Xiang Ren, Muhao Chen, Asli Celikyilmaz, Maryam Fazel-Zarandi
Preprint, 2023
arxiv / code
A dual system that answers complex questions over charts step by step, combining an LLM with a vision module.

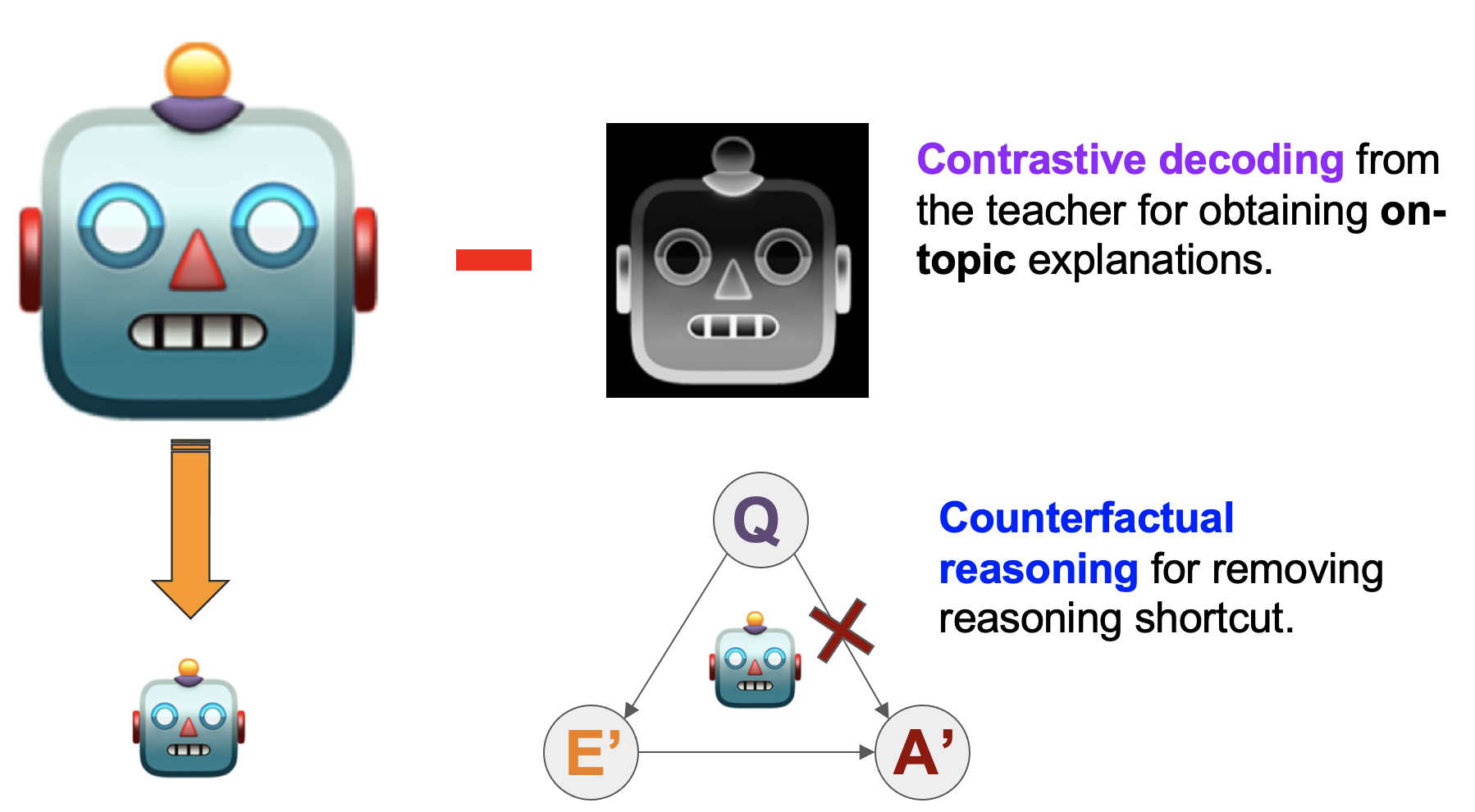

SCOTT: Self-Consistent Chain-of-Thought Distillation
Peifeng Wang, Zhengyang Wang, Zheng Li, Yifan Gao, Bing Yin, Xiang Ren
Annual Meeting of the Association for Computational Linguistics, 2023 (Outstanding Paper Award)
arxiv / code / blog
A faithful knowledge distillation method that learns a small, self-consistent Chain-of-Thought model from a large teacher model.

PINTO: Faithful Language Reasoning Using Prompt-Generated Rationales
Peifeng Wang, Aaron Chan, Filip Ilievski, Muhao Chen, Xiang Ren
International Conference on Learning Representations, 2023
arxiv / code
An LM pipeline that generates rationales via prompt-based learning and learns to reason faithfully over them.

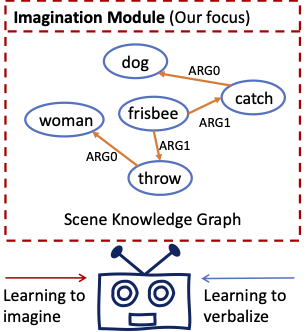

Contextualized Scene Imagination for Generative Commonsense Reasoning
Peifeng Wang, Jonathan Zamora, Junfeng Liu, Filip Ilievski, Muhao Chen, Xiang Ren
International Conference on Learning Representations, 2022
arxiv / code
An Imagine-and-Verbalize framework that learns to imagine a scene knowledge graph (SKG) and uses the SKG as a constraint when generating a plausible scene description.

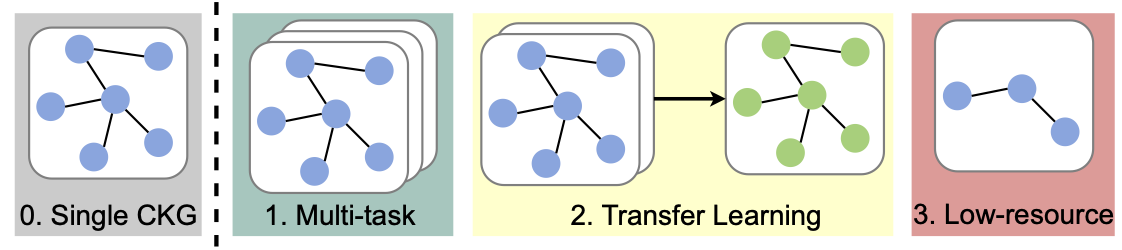

Do Language Models Perform Generalizable Commonsense Inference?
Peifeng Wang, Filip Ilievski, Muhao Chen, Xiang Ren
Annual Meeting of the Association for Computational Linguistics - Findings, 2021
arxiv / code
An analysis of whether LMs can perform generalizable commonsense inference, in terms of knowledge capacity, transferability, and induction.

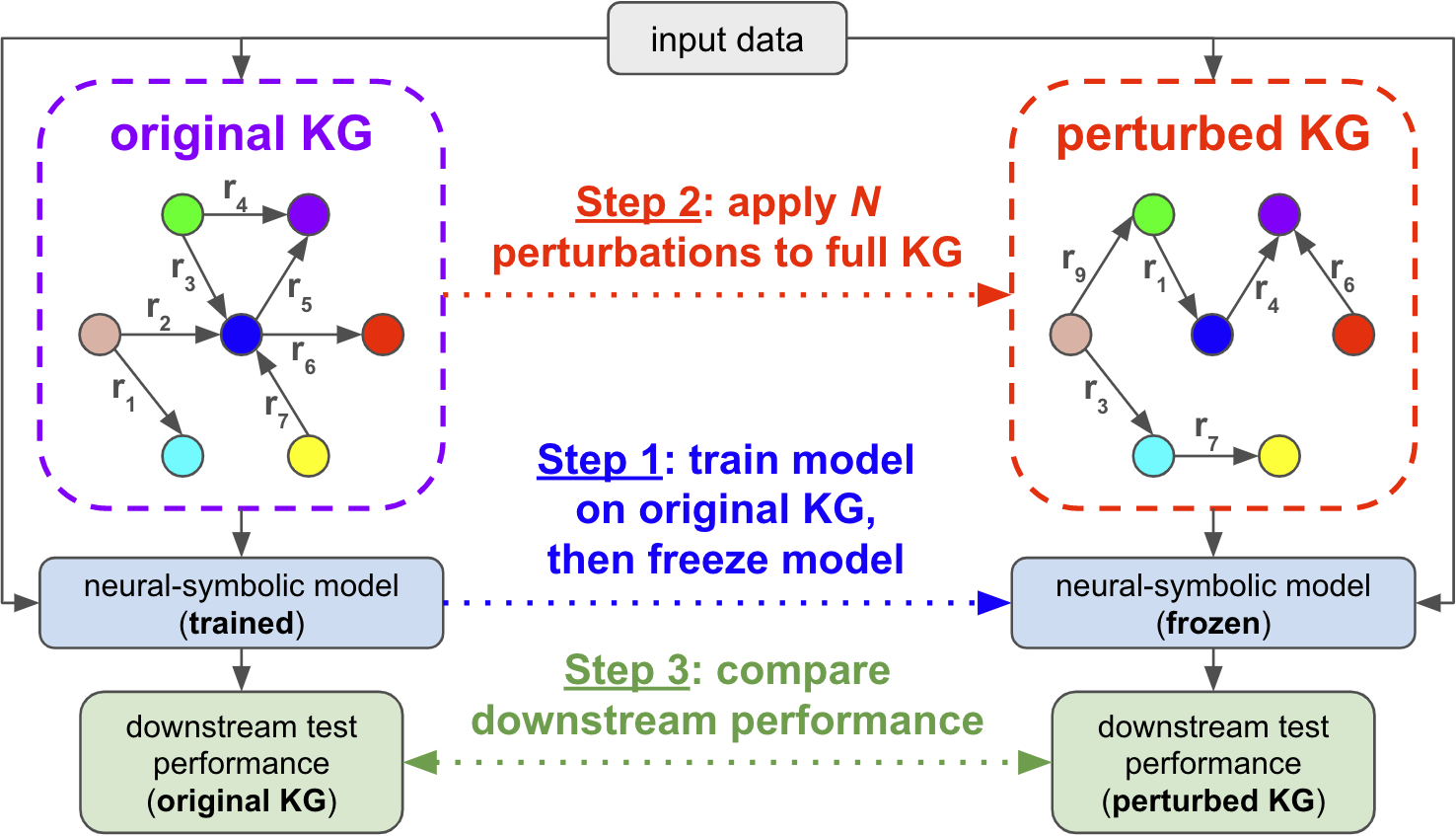

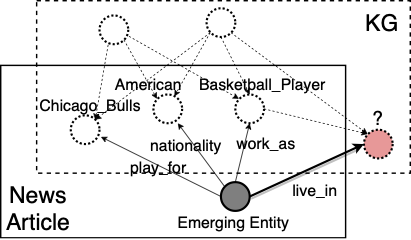

Learning to Deceive Knowledge Graph Augmented Models via Targeted Perturbation
Mrigank Raman, Siddhant Agarwal, Peifeng Wang, Aaron Chan, Hansen Wang, Sungchul Kim, Ryan Rossi, Handong Zhao, Nedim Lipka, Xiang Ren
International Conference on Learning Representations, 2021
arxiv / code
An analysis of how strategically perturbed knowledge graphs (KGs) affect the downstream-task performance of KG-augmented models.

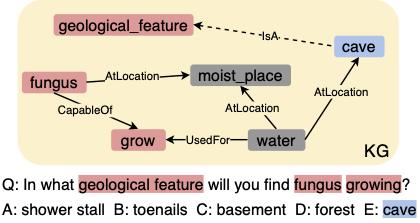

Scalable Multi-Hop Relational Reasoning for Knowledge-Aware Question Answering
Yanlin Feng, Xinyue Chen, Bill Yuchen Lin, Peifeng Wang, Jun Yan, Xiang Ren
Conference on Empirical Methods in Natural Language Processing, 2020
arxiv / code
A novel knowledge-aware approach that equips pre-trained language models with a multi-hop graph relation network.

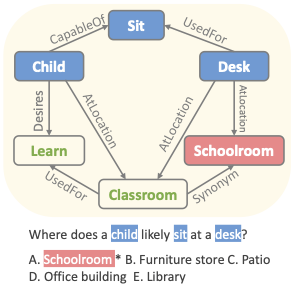

Connecting the Dots: A Knowledgeable Path Generator for Commonsense Question Answering
Peifeng Wang, Nanyun Peng, Filip Ilievski, Pedro Szekely, Xiang Ren
Conference on Empirical Methods in Natural Language Processing - Findings, 2020
arxiv / code
A general commonsense QA framework augmented with a knowledgeable path generator, which learns to connect a pair of concepts in text with a dynamic, and potentially novel, multi-hop relational path.

Logic Attention Based Neighborhood Aggregation for Inductive Knowledge Graph Embedding
Peifeng Wang, Jialong Han, Chenliang Li, Rong Pan
AAAI Conference on Artificial Intelligence, 2019
arxiv / code
An inductive knowledge graph embedding model, the Logic Attention Network, which aggregates an entity's neighbors using both rule-based and network-based attention weights.

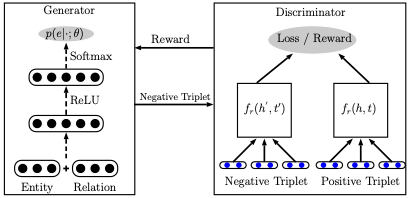

Incorporating GAN for Negative Sampling in Knowledge Representation Learning
Peifeng Wang, Shuangyin Li, Rong Pan
AAAI Conference on Artificial Intelligence, 2018
arxiv
A knowledge representation learning framework based on Generative Adversarial Networks (GANs) that aims to obtain high-quality negative samples for more effective knowledge embedding.

Work Experience

Design and source code from Jon Barron's website